Defining DevOps – what it should be, and if it should even be done – has become a surprising controversy over the past five years. The “godfather” of DevOps himself, Patrick Debois, famously resists any kind of formal definition. He thinks it comes down to culture:

The Devops movement is built around a group of people who believe that the application of a combination of appropriate technology and attitude can revolutionize the world of software development and delivery. The demographic seems to be experienced, talented 30-something sysadmin coders with a clear understanding that writing software is about making money and shipping product. More importantly, these people understand the key point – we’re all on the same side! All of us – developers, testers, managers, DBAs, network technicians, and sysadmins – are all trying to achieve the same thing: the delivery of great quality, reliable software that delivers business benefit to those who commissioned it. [debois]

This is great, but it’s hardly definitive. Just look at the Agile Manifesto for example. This gave us a definition of what Agile is (or more correctly, how it behaves) and the guiding principles behind it. Most have stood the test of time; more importantly, it’s a firm stake in the ground. We learn as much from the holes and the understressed points as we do from the things that have stuck over time. The Agile Manifesto and its underlying principles have been one of the most impactful and successful set of concepts in the 21st century in most organizations. DevOps is very much just an extension of Agile; it’s incomprehensible to us that we should deviate from this successful model and pretend that a DevOps Manifesto should be considered impossible or too formulaic.

For us personally, we find an exact definition of DevOps in terms of what it is to be elusive. Not for the lack of trying – many very experienced and brilliant people have taken stabs at it over the years. One of our most prominent thought leaders, Gene Kim, has defined it in the past as:

The emerging professional movement that advocates a collaborative working relationship between Development and IT Operations, resulting in the fast flow of planned work (i.e., high deploy rates), while simultaneously increasing the reliability, stability, resilience and security of the production environment. [kim3]

… a very good definition; it captures the elements of partnership between dev and Ops, starts with people, and ends with the results – a fast flow of work and increased quality and stability.

|

Some Other DevOps Definitions

|

|

Wikipedia offers this definition:

DevOps (a clipped compound of “development” and “operations”) is a software engineering culture and practice that aims at unifying software development (Dev) and software operation (Ops). The main characteristic of the DevOps movement is to strongly advocate automation and monitoring at all steps of software construction, from integration, testing, releasing to deployment and infrastructure management. DevOps aims at shorter development cycles, increased deployment frequency, more dependable releases, in close alignment with business objectives.[i]

In “DevOps: A Software Architect’s Perspective” the authors define DevOps as:

DevOps is a set of practices intended to reduce the time between committing a change to a system and the change being placed into normal production, while ensuring high quality.

Gartner has offered this definition:

DevOps represents a change in IT culture, focusing on rapid IT service delivery through the adoption of agile, lean practices in the context of a system-oriented approach. DevOps emphasizes people (and culture), and seeks to improve collaboration between operations and development teams. DevOps implementations utilize technology — especially automation tools that can leverage an increasingly programmable and dynamic infrastructure from a life cycle perspective. [gartner]

From Damon Edwards;

DevOps is… an umbrella concept that refers to anything that smooths out the interaction between development and operations. [damon]

The Agile Admin:

DevOps is the practice of operations and development engineers participating together in the entire service lifecycle, from design through the development process to production support. DevOps is also characterized by operations staff making use many of the same techniques as developers for their systems work. [agileadmin]

And from Rob England, aka the IT Skeptic – who we interviewed earlier in this book:

DevOps is agile IT delivery, a holistic system across all the value flow from business need to live software. DevOps is a philosophy, not a method, or framework, or body of knowledge, or *shudder* vendor’s tool. DevOps is the philosophy of unifying Development and Operations at the culture, system, practice, and tool levels, to achieve accelerated and more frequent delivery of value to the customer, by improving quality in order to increase velocity. [itskeptic]

Or Ken Mugrage of ThoughtWorks:

“DevOps: A culture where people, regardless of title or background, work together to imagine, develop, deploy and operate a system.” [mug2]

Putting all these definitions together, we’re starting to see a common thread around how important it is to prepare the ground and consider the role of culture. Perhaps Adam Jacobs takes a position similar to ours, saying that the exact definition may be best described by behavior:

“DevOps is a cultural and professional movement. The best way to describe devops is in terms of patterns and anti-patterns.”

… which is exactly what we’ve tried to do in our upcoming book.

|

Other definitions can be found in the sidebar “Some Other DevOps Definitions”. Suffice to say, there’s lots to choose from, and we won’t tell you what’s best. We can tell you our favorite though – from Donovan Brown:

“DevOps is the union of people, process, and products to enable continuous delivery of value to our end users.” [donovan]

This is exactly the right order of things and there’s not a wasted word; we can’t improve on it. This is what’s used in Microsoft as a single written definition, as it reflects what we want and value out of DevOps. Having that single definition of truth published and visible helps keep everyone on the same page and thinking more holistically.

It seems unlikely that community consensus on a single unified definition of DevOps will ever happen. The purist, engineer part of us hates this; but as time went on we realized from our research and interviews that this apparent gap was ultimately not important, and in fact was beneficial. At one conference, we remember the speakers asking the crowd how many of them were ‘doing Agile’ – about 300 hands went up, the entire audience. Then the speakers asked, a little condescendingly, “OK, now which of you are doing it right?” – and three people kept their hands up, who were then ridiculed for being bold-faced liars!

At the time we remember feeling a little shocked that so few were adhering to the stone tablets brought down from the mountain by Ken Schwaber and company. Now, we realize how shortsighted and rigid that point of view was. Agile should never have been thought of as a list of checkmarks or a single foolproof recipe. It’s likely that most of those 300 people in the audience were better off because of adopting some parts of scrum or Agile in the form of transparency and smaller amounts of work, and were building on that success. That’s far more important than ‘doing it right’.

The same holds true with DevOps and the principles behind continuous delivery. No single definition of ‘doing DevOps right’ exists, and it likely never will. What we realized in gathering information from this book was that this gap is fundamentally not important. A global definition of DevOps isn’t possible or helpful; your definition of DevOps however is VERY important. Put some thought into what DevOps means in specific terms for your specific situation, defining it, and making it explicit. Having that discussion as a group and coming up with your own definition – or piggybacking on one of the above thoughts – is time well worth spending. Over time you’ll find that the exact “what” shrinks as you focus more on the “why” and the “how” of continually improving your development processes to drive more business value and feedback quality.

…But We Do Know How It Behaves

A Manifesto is a public declaration of policy, a declaration or announcement; here we’re standing on the shoulder of the giants that came up with the brilliant Agile Manifesto – easily the most groundbreaking and impactful set of principles in the software development field in the past thirty years.

Since the Agile Manifesto was written in 2001, we’ve learned some fault points and pitfalls with Agile implementations and its guiding principles[1]:

|

Strengths

|

Weaknesses

|

|

Processes and tools placed in second position to the makeup of the team, including direct communications and self-organization

|

Continuous delivery is (rightly) stressed; most development shops would ignore this with complex branching structures and rigid gates, causing long integration periods and infrequent releases.

|

|

Priorities are set by the business with regular checkpoints

|

“Deliver working software frequently” sabotaged by gap in covering QA/test and lack of automation/maturity in Operations, and siloed traditional org structures

|

|

Excessive documentation and lengthy requirements gathering shelved in favor of responding to change

|

Exploding technical debt caused by ignoring the principle “continuous attention to technical excellence and good design” in favor of velocity

|

|

Sprint retrospectives, daily scrums, and other artifacts showing accountability and transparency

|

Agile practices work best with small units and don’t address either epic/strategic level planning (besides “responding to change”) and how to scale effectively in large organizations. (SAFe is making great headway in addressing this)

|

|

Time boxed development periods followed by releases – the shorter the better (1-4 weeks)

|

|

|

High trust teams (“give motivated individuals the environment and support they need, and trust them to get the job done.”)

|

|

|

Simplicity – the art of maximizing the amount of work not done – addresses the recurring shame of the software industry: most features as delivered are not used or do not meet business requirements

|

|

|

Reflection (tuning and adjusting) key to building a learning / iteratively improving culture

|

|

For being (as of this writing) nearly 20 years old, this set of principles has weathered amazingly well. Most problems we’ve seen to date have been caused by misapplications, not with the thinking of the original architects themselves.

You’ll notice though that we didn’t stop there. However far-seeing and visionary the original signers were of the Agile Manifesto, there were some gaps exposed over the past ten years that need to be addressed. For starters, the Agile Manifesto favored individual interactions over processes and tools (ironic, since to many “Agile” has become synonymous with a tool, Version control, and a process, Daily Scrums and Retrospectives!) Agile was wildly successful in creating tightly focused development teams with a good level of trust-based interactions; the pendulum may have swung too far to the right on the fourth principle on “responding to change”. Companies have had varied success in scaling Agile beyond small working teams; we’ve seen heinous practices like 20-person drum circles and endless daily scrums combined with a complete lack of strategy – doing the wrong thing sprint by sprint with massive amounts of thrash. It is completely possible to execute Agile with a strategic vision and with a good level of planning; this is covered in more depth in a previous chapter.

This first point was more of a flaw in application by companies that misunderstood (or took too far) Agile principles. The second most dangerous flaw in the manifesto however was in what was only mentioned once and is most often overlooked – quality. Consistently, across almost all Agile implementations, we see teams struggling with the outcome of a tight focus on velocity in the form of managing technical debt. Some even have proposed adding a fourth point to the Project Management Triangle of functionality, time, and resources, something we and several others disagree with. (It tends to muddy the waters and imply that quality is a negotiating point with project managers and can be adjusted; successful teams from the days of Lean Manufacturing on build quality into the process as early as possible in the pipeline as their way of doing business; its built-in as a design factor into all project plans and time estimates.) Scrapping excessive documentation and over-specced requirements was a masterstroke; as we have seen, too many orgs have misinterpreted this as meaning “no documentation” and shortchanged QA teams and testing during project crunches, and left their software in a nonworking state for much of the development process.

The third flaw, that tight myopic focus on the development of code, is what DevOps is designed to resolve – which is why DevOps has been called “the second decade of Agile”. We’ve discussed this at length earlier, but we’ll say it again – if it isn’t running, in production, with feedback to the delivery team – it doesn’t count. Agile was meant to deliver working software to production, where continual engagement with stakeholders/product owners would fine-tune features and reduce waste due to misunderstandings. Yet it addressed only software development teams, not the critical last mile of the marathon where software releases are tested, delivered to production, and monitored.

And so, in an attempt to resolve this problem of the “last mile” – along comes DevOps, sprinting to the forefront about ten years after the Agile manifesto was written. (What will we be calling this in ten years more, I wonder?) While the exact definition of DevOps remains in flux – and likely will remain so for some time – there’s a very clear vision of the evils DevOps is attempting to resolve. Stephen Nelson-Smith put it very frankly:

“Let’s face it – where we are right now sucks. In the IT industry, or perhaps to be more specific, in the software industry, particularly in the web-enabled sphere, there’s a tacit assumption that projects will run late, and when they’re delivered (if they’re ever delivered), they will underperform, and not deliver well against investment. It’s a wonder any of us have a job at all!”

Stephen went on to isolate four problems that DevOps is attempting to solve:

- Fear of change (due to a well founded belief that the platform/software is brittle and vulnerable; mitigated by bureaucratic change-management systems with the evil side effect of lengthy cycle times and fix resolution times

- Risky deployments (Will it work under load? So, we push it out at a quiet time and wait to see if it falls over)

- It works on my machine! (the standard dev retort once sysadmins report an issue, after a very cursory investigation) – this is really masking an issue with configuration management

- Siloization – the project team is split into devs, testers, release managers and sysadmins. This is tremendously wasteful as a process as work flows in bits and dribbles (with wait times) between each silo; it leads to a “lob it over the wall” philosophy as problems/blame are passed around between “team” members that aren’t even working in the same location. This “us versus them” mentality that often results leads to groups of people who are simultaneously suspicious of and afraid of each other.

These four problems seem to be consistent and seems to hit the mark of what the DevOps movement – however we define it – is trying to solve. DevOps is all about punching through barriers – large sized, manual deployments that break, firefighting bugs that appear in production due to a messy or inefficient testing suite or mismanaged configurations and ad hoc patches, and long wait times between different siloes in a shared services org.

So, the problem set is defined. Are there common binding principles we can point to that could be as useful as the Agile Manifesto was back in the 2000’s?

The Tolstoy Principle

We keep circling back to the famous opening lines Tolstoy wrote for his masterpiece “Anna Karenina”:

“All happy families are alike; every unhappy family is unhappy in its own way.”

Just as it’s a mistake to be overly prescriptive and recipe-driven with either Agile or DevOps – it would be even worse to repeat the “scrumterfall” antipatterns we’ve seen and throw the last ten years of hard-won lessons and principles out the window because “our company is unique and special / our business won’t allow this”. Tolstoy noted a fact that applies to organizations as well as families: Happy families tend to (even unconsciously) have certain common patterns and elements, well defined roles, and follow a structure that creates the environment for success. Unhappy families tend to have a lot of variance, little discipline (or too much), great inconsistency in how rules are followed, and no introspection or learning so that things iteratively improve.

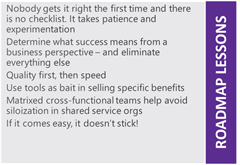

Building on this definition, and thinking back to the Tolstoy Principle (all happy families are alike), we believe there are some common traits found in happy, successful DevOps families:

- Fast release cycles and continuous delivery

- Cross functional small teams responsible for product end-to-end

- Continual learning and customer engagement

- Discipline and a high level of automation

We could be grandiose and call this the DevOps Manifesto -but of course that’s neither possible or really necessary. Let’s just call this what it is – an observation of four key principles you’ll want to include in your vision. This attempts to define DevOps by how it behaves versus a prescriptive process, and we believe it adds on the foundation laid by the simple, neat definition of DevOps we lean towards: “the union of people, process, and products to enable continuous delivery of value to our end users”.

Tolstoy here put his finger on something that applies to organizations as well as families: Happy families tend to have certain common patterns and elements, well defined roles, and follow a structure that creates the environment for success. Unhappy families tend to have a lot of variance, either too much or too little discipline, great inconsistency in how rules are followed, and no introspection or learning so that things iteratively improve.

There’s an abundance of literature and material produced on DevOps and how it has addressed the three gaps above; for us that begins with the inspirational work produced by the “Big Three” of Jez Humble, Gene Kim, and Martin Fowler. Sifting through this mountain of research, we’re humbled by the quality of thought and vast amount of heroic effort that went into completing our Agile journey and eliminating waste in delivering business value faster across our industry. We also believe each presents different facets (or from different points of view) of the four core qualities or principles covered with our DevOps Manifesto above. All happy DevOps families truly are alike.

Let’s break each principle down in more detail:

Fast release cycles and continuous delivery

This is the one KPI that we feel is consistent and tells the most about the true health of a software delivery lifecycle: how long does it take for you to release a minor change to production? This tells you your cycle time; it’s not uncommon for customer requests to be tabled for months or years as development and IT teams are buried in firefighting or a lengthy list of aging stories.

A second question is, How many releases do you deliver, on average, per month? Increasing the frequency of your production releases is the best indicator we know of that a DevOps effort is actually gaining traction. In fact, if this is the only outcome of your DevOps adoption program – that release times are reduced by 50% or more – it’s likely that you can consider your effort an unqualified success.

If you have a fast cycle time, you are living the spirit of DevOps – your teams are delivering software at a fast clip and your customers do not have to wait for unacceptable lengths of time for new value to appear, be tested, and iteratively improved (or discarded if the new feature is not successful).

If you have frequent releases to production – your releases are small, incremental. This means you can quickly isolate and resolve problems without wading through tens of thousands of bundled changes; as the team has practiced releases thousands of times including rollbacks, everyone is comfortable with the release cycle and problems both with code, integration, and threats to your jugular – the release pipeline itself – will be fixed quickly. The old antipattern of the “war room” release with late hours frantically fixing bugs and issuing emergency hotfixes will become a thing of the past.

This goes without saying but just to be clear; by “fast release cycle” we mean fast, all the way, to production. That’s the finish line. A fast release cycle to QA – where it will sit aging on a shelf for weeks or months – gains us nothing. And by “continuous delivery” we mean “no long-running branches outside of mainline”. In the age of Git and distributed development there’s room for flexibility here, but one fact has remained constant since Jez Humble’s definitive book on the subject: lengthy integration periods are both evil and avoidable. It’s perfectly acceptable and perhaps necessary to have a release branch so issues can be reproduced; long running feature branches, almost inevitably, cause much more pain than they are trying to solve. This we will also explore later; suffice to say, we haven’t yet encountered an application that couldn’t be delivered continuously, with a significant amount of automation, direct from mainline. Your developers should be checking into mainline, frequently – multiple times a day – and your testing ecosystem should be robust enough to support that.

Teams that ignore this and build their release pipeline with complex branching strategies end up incurring the wrath of the Integration Gods, whose revenge is terrible; they are afflicted with lengthy and disruptive stabilization periods where the software is left in a nonworking or unreleasable state for long periods of time as long-lived branches are painfully merged with main. The Agile Manifesto focused on delivering working software quickly in contrast to lengthy documentation; the DevOps Manifesto extends this by calling on software delivery teams to deliver that working software, to production, continuously – from mainline.

We did mention multi-month milestones as an antipattern; this ties in with our Agile DNA of favoring responding to change and ongoing customer collaboration over following lengthy waterfall-type delivery plans and hundred-page requirements documentation that ages faster than milk. Still, it’s foolish for us to throw planning out the window and pretend that we are only living in the moment; software is developed tactically but should always adhere to a strategic plan that is flexible but makes sure we are hitting the target versus reactively shifting priorities sprint to sprint. We’ll cover the planning aspects more in a later chapter.

By “fast release cycles” we are very careful not to define what that means for you, exactly. Does it really matter to your customers or business if you can boast about releasing 1,000 or 10,000 releases a day? Of course not; a count of release frequency is a terrible goal by itself, and has nothing to do with DevOps. But as an indicator, it’s a great litmus test – are our environments and release process stable enough to handle more frequent releases? Teams that are on the right track in improving their maturity level usually show it by a slow and steady increase in their release frequency. We’ll point you to Rob England’s story earlier in this book of his public sector customer, whose CIO made an increased rate of release – say every 6 weeks instead of 6 months – a singular goal for their delivery teams. A steadier cadence meant pain, which in turn forced improvement. This worked for them because in their case deployments were their pain point – as Donovan Brown is fond of saying, “If it hurts, do it more often!”

Cross functional small teams

As Amazon CTO Werner Vogels says; “you build it, you run it”.

We’ll get more into team dynamics later. Suffice to say that over the past twenty years the ideal team size has been remarkably well defined – anywhere from 8 to 12 people. Less, and the teams are often too small to do the end-to-end support they’re going to be asked to do; more and team efficiency and nimbleness drops dramatically. Jeff Bezos of Amazon is famous for quipping, “Communication is terrible!” – in the sense that too much time is wasted in large teams. The “two pizza” rule begun in Amazon – where if a team grows larger than can be fed with two pizzas, its broken up – has been applied in many medium and large-sized organizations with close to universal success.

The sticking point here for most organizations is the implications of “cross functional”. Software development teams are offshoots of corporations after all; corporations and large industries were born from the Industrial Era. The DNA that we have inherited from that time of mass production, experimental science and creative innovation worked very well for its time – including grouping specialists together in functional groups. In software development teams however, that classical organizational structure works against us – each handoff from group to group assigned to a particular task lengthens the release cycle and strangles feedback. Again, we’ll cover this in greater detail later in the book – suffice to say, there is no substitute or workable alternative we know of to having a team responsible for its work in production. Efforts to form “virtual teams” where DBA’s, architects, testers, IT/Ops and developers resolves some problems around communication but the fact that each member has a different direct report or manager – and often different marching orders – creates the seeds for failure from the get-go.

We’re well aware that asking companies to change their structure wholesale from functional groups to a vertical structure supporting an app or service end-to-end is a mammoth undertaking. Some companies have made the painful but necessary leap in a mass effort; Amazon, Netflix, and Microsoft included. If your organization has massive problems – we’re talking an existential threat to survival, the kind that ensures enthusiastic buy-in from the topmost levels – and a strong, capable army of resources, this wartime-type approach may deliver for you. (See the Jody Mulkey interview in this book for a discussion on how this kind of a pivot can be structured and driven.) But a word of caution – speak to the survivors of these kinds of massive, wrenching transformations, and they’ll often mention the bumps and bruises they suffered that in retrospect could have been avoided. Often in most enterprises the successful approach is the slow and gradual one. More on this in a later chapter.

We hate how prescriptive Agile has become – and creating unnecessary or silly rules is one mistake that we don’t want to repeat. Over the past twenty years however, software teams in practice have finally caught up to the way cross functional units are built in the military, SWAT teams and elsewhere. It does appear to be a consistent guideline and a necessary component of DevOps – small teams are better than large ones, and efficient, nimble teams are usually 8 to 12 people in size.

Why is it important though that a team handles support in production? At an Agile conference in Orlando in 2011, one presenter made a very impactful statement – he said, “For DevOps to work, developers must care more about the end result – how the application is functioning in production. And Operations must care more about the beginning.” With siloed teams and separate areas of responsibility, there’s too much in the way of customer feedback, usage and monitoring data making their way back to the team producing features. Having the team be responsible for handling bug triage and end user support removes that barrier; this can be uncomfortable but in terms of keeping the team on point and delivering true business value – and adjusting as those priorities shift – there again is no substitute. It solves the problem mentioned at that conference – suddenly developers care, very much, about how happy their user base is and how features are running in production; Operations people, by being folded into the beginning and sharing the same focus and values as the project team, will be in a much better position to pass along valuable feedback so the team will stay on target.

In our experience, we’ve found very few companies where Agile transformations have not worked – in fact, we’ve never seen one fail. This is because the scope of Agile is limited to just the development portion; limiting the scope to just one group of people who often think and value the same things alike is a good recipe for a coherent mission and success. In contrast, DevOps efforts are fraught with peril. In the past five years, we’ve seen nearly a 50% failure rate; there is inevitably very strong resistance pockets even with strong executive and leadership support. DevOps has become both a controversial word over the past decade and a very disruptive and chancy – risky – organizational challenge.

Why the resistance? Part of it is due to the cross-cutting nature of development. For DevOps to work – really work – it requires a sea change of difference in how organizations are structured. Most medium to large sized orgs have teams organized horizontally in groups by function – a team of DBA’s, a team of devs, QA, Operations/IT, a project management and BSA layer, etc. This structure was thought to improve efficiency by grouping together specialists, and each is jealously guarded by loyalists and executives intent on protecting and expanding their turf. Most successful DevOps efforts we’ve seen require a vertical organization, where teams are autonomous and cross discipline. There are exceptions – some are mentioned in case studies in this book. But even with those exceptions, their adoption of DevOps has been slower than it could have been; eliminating these siloes appears to be a vital ingredient that can’t be eliminated from the recipe.

Another reason is that we are trying to “smoosh” together two groups with diametrically opposite goals and backgrounds. Operations teams are paid and rewarded based on stability; they are focused on high availability and reliability. Reliability and stability are on opposite ends of the spectrum from where development teams operate – change. Good development teams tend to focus on cool, bleeding-edge new technology its application in solving problems and delivering features for their customers – in short, change. This change is disruptive and puts at risk the stability and availability goals that IT organizations fixate on.

A culture of learning and customer engagement

This is another leaf off of the Agile tree, in this case the branches having to do with customer collaboration and responding to change. The signers of the original Agile Manifesto intended those last two principles to correct some known weaknesses of the old Gantt-dominated long-running projects; inflexible requirements that were hard-set as a contract at the beginning of a long-running project, leading to software not matching what their business partners were asking for. Perhaps the customers were really not sure what they wanted; perhaps their business objectives changed over the months code was in development.

Many so-called “scrum masters” get lost in the different ceremonies and artifacts around Agile and Scrum, and forget the key component of continual engagement with a stakeholder. Any true Agile team uses a sprint development cycle of a very short period of time – 1 to 4 weeks, the shorter the better – where at the end a team has a review with the business stakeholder to check on and correct their work. We knew we were going to be wrong at the end of the delivery cycle and the features we deliver wouldn’t meet the customer’s expectations. That’s OK – at least we could be wrong faster, after two weeks instead of 6 months. Checking in with the customer regularly is a must-have for Agile team; in retrospect, that continual engagement became the most powerful and uplifting component of Agile development.

Keeping a learning attitude leads to blame free postmortems – the single big common point. Is it safe to make changes? Do we learn?

Discipline and a high level of automation

One of the biggest antipatterns seen with scrum and Agile was the lack of a moat – anyone can (and did) fork over a thousand bucks for a quick course, call themselves “Scrum Certified”, and put up their shingle as an Agile SDLC consultant. Of the dozens of certified scrum masters I’ve met – shockingly few have ever actually written a line of code, or handled support in any form in a large enterprise.

Thankfully, I don’t see that happening with DevOps. You just can’t separate out coding, tools, and some level of programming and automation experience from running large-scale enterprise applications in production. So, tools are important.

Wrapping It Up

The four core DevOps principles we discuss above are – we believe – fundamental to any true DevOps culture. Removing or ignoring any one of these will limit your DevOps effectiveness, perhaps crippling it from inception.

For example, having an excessively large team will make the “turn radius” of your team too wide and cut down on efficiency. If the team is not responsible for the product end to end the feedback cycles will lengthen unacceptably, and too much time will be wasted fighting turf battles, trying to shove work between entrenched siloes with separate and competing priorities, and each team member comes into the project with a different perspective and operational goals. Any DevOps effort focused on “doing DevOps” and not on reducing release cycles and continuously delivering working software is fundamentally blinded in its vision. Not having the business engaged and prioritizing work creates waste as teams waste effort guessing on correct priorities and how to implement their features. Learning type organizations are friendlier to the amount of experimentation and risk required to weather the inevitable bumps and bruises as these changes are implemented and improved on. And without automation – a high level of automation both in building and deploying releases, executing tests, supporting services and applications in production and providing feedback from telemetry and usage back to the team – the wheels begin to fall off, very quickly.

In the case of DevOps, we believe there are certain common qualities that define a successful DevOps organization, which should be the end goal of any DevOps effort. There will be no DevOps Manifesto as we have with Agile –but success does seem to look very much the same, regardless of the enterprise. All DevOps families, it turns out, are very much alike.

|

Other Views of DevOps

|

|

There’s been many efforts to break DevOps down into a kind of taxonomy of species, and some stand out. For example, Seth Vargo of Google broke down DevOps into five foundational pillars:

- Reduce organizational siloes (i.e. shared ownership)

- Accept failure as normal

- Implement gradual change (by reducing the costs of failure)

- Leverage tooling and automation (minimizing toil)

- Measure everything

…. Which we find quite nifty, and covers the sweet spots.

A book we quite admire and quote from quite a bit is Accelerate, which lists some key capabilities, broken into five broad categories: Continuous Delivery, Architecture, Product and Process, Lean Management and Monitoring, and Culture. This is another very solid perspective on how DevOps looks in practice.

|

Continuous Delivery

|

Use VC for all production artifacts

Automate your deployment process

Continuous integration

Trunk-based development methods (fewer than 3 active branches, branches and forks having short lifetimes (<1 day), no “code loc” periods where no one can do pull requests/check out due to merging conflicts, code freezes, stabilization phases)

Test automation (reliable, devs primarily responsible)

Support test data management

Shift left on security

Continuous delivery (software in deployable state throughout lifecycle, team prioritizes this over any new work)

Includes visibility on deployability / quality to all members

The system can be deployed to end users at any time on demand

|

|

Architecture

|

Loosely coupled architecture; a team can test and deploy on demand without requiring orchestration

Empowered tools (I can choose my own tools)

|

|

Product and Process

|

Gather and implement customer feedback

Make the flow of work visible (i.e. value stream)

Work in small batches. MVP, rapid dev and frequent releases – enable shorter lead times and faster feedback loops

Foster and enable team experimentation

|

|

Lean management and monitoring

|

Lightweight change approval process

Monitor across application and infrastructure to inform business decisions

Check system health proactively

Improve processes and manage work with work-in-process (WIP) limits

Visualize work to monitor quality and communicate throughout the team. Could be dashboards or internal websites

|

|

Cultural

|

Support a generative culture (Westrum) – meaning good information flow, high cooperation and trust, bridging between teams, and conscious inquiry.

Encourage and support learning: Is learning thought of as a cost or an investment?

Support and facilitate collaboration among teams

Provide resources and tools that make work meaningful

Support or embody transformational leadership (vision, intellectual stimulation, inspirational communication, supportive leadership, personal recognition)

|

|

References

See https://theagileadmin.com/2010/10/15/a-devops-manifesto/, a very good effort but in our opinion missing a few key pieces.

See https://devops.com/no-devops-manifesto/ – Christopher Little, writing in May 2016, feels very strongly that the very idea of a DevOps Manifesto is too rule-oriented and makes the curious argument that if one existed it would prevent any kind of meaningful dialogue. It’s an interesting position by a good writer, without much if any supporting evidence.

[debois] – http://www.jedi.be/blog/2010/02/12/what-is-this-devops-thing-anyway/

[kim3] – The top 11 things you need to know about DevOps’, Gene Kim

[donovan] – http://donovanbrown.com/post/what-is-devops

[do2] – (http://dev2ops.org/2010/02/what-is-devops/)

[agileadmin] – (https://theagileadmin.com/what-is-devops/)

[garntner] – (https://www.gartner.com/it-glossary/devops)

[itskeptic] – Rob England, “Define DevOps: What is DevOps?” – 11/29/2014, http://www.itskeptic.org/content/define-devops

[mug2] – Ken Mugrage, “My Definition of DevOps”, https://kenmugrage.com/2017/05/05/my-new-definition-of-devops/#more-4 – Note, Ken seems to agree with us that a one-size-fits-all definition isn’t of value: “It’s not important that the “industry” agree on a definition. It would be awesome, but it’s not going to happen. It’s important that your organization agree (or at least accept) a shared definition.”

[sre] Change Management SRE has found that roughly 70% of outages are due to changes in a live system. Best practices in this domain use automation to accomplish the following: Implementing progressive rollouts Quickly and accurately detecting problems Rolling back changes safely when problems arise This trio of practices effectively minimizes the aggregate number of users and operations exposed to bad changes. By removing humans from the loop, these practices avoid the normal problems of fatigue, familiarity/ contempt, and inattention to highly repetitive tasks. As a result, both release velocity and safety increase.